The Hidden Discipline Behind Successful Software Development Agents

Almost every complaint about AI agent tools going off the rails reduces to one problem: if AI is misbehaving, you haven’t built your project with enough rigor and structure to enable autonomous development.

The irony is that building effectively with agents forces you to face fundamentals many engineers avoid. Why? Because writing code feels more satisfying than thinking about code. We all know this. No matter how mature we are, the urge to start coding first is irresistible. YOLO.

If you give in to that impulse with agents—and I have—you’ll paint yourself into a corner of doom. You’ll end up posting on Reddit about the AI bubble, listing all the ways your bot ruined your life. Agent frameworks and tools like v0, Bolt, Lovable, and Figma’s Make encourage this bad behavior even more - but it feels so good!!! The paradox is that AI requires the boring stuff if you want it to work. Stay strong.

The tasks engineers historically dislike (documentation, testing, CI/CD, deterministic success gates, metrics, a squeaky-clean containerized dev environment, upfront product definition, design, structured logging, cloud orchestration) are quickly becoming the majority of the job.

And this doesn’t just apply to engineers. Product managers and designers are in the same boat. We’re converging toward a single builder role. That’s how the most productive creators have always worked. Before AI, it was easy to lean on other specialists instead of wearing multiple hats. Now, wearing multiple hats is the only way to build efficiently.

There are teams using agents with discipline in both greenfield and legacy environments. They’re sipping coffee or sleeping while Claude Code is doing their work.

A great example is this essay and quote from Sunday Letters:

I think what we are starting to see is that the “pure AI code” maximalists are finally getting to the point where their systems work well enough (and the models are also getting good enough to support these ideas).

Maybe a success metric for teams should be "time since last human intervention"?

We have a choice: remain slow, error-prone, and overworked, or multiply ourselves through agents to build faster, better, and more profitably. If you love building, you’re in a new universe of productivity—and it’s just beginning.

What I’ve learned so far

I’m not at an end state, but it’s becoming impossible to work effectively without strong fundamentals. As I’ve become less afraid of AI and more excited about multiplying my output, I’ve realized the primary goal is getting GPUs to work for me while I’m not at the keyboard, and to never stop. YOLO. I’m getting closer every day, with more to do.

Here’s my current checklist when I spin up an agent-driven project.

Engineering foundations

- A well-defined, documented workflow keeps the agent aligned.

- `CLAUDE.md`, `AGENTS.md`, and Cursor rules establish best practices and expected workflows.

- Subagents for specific tasks and parallelization. My defaults are a DRY refactoring subagent and an architect-level engineer specialized in my stack.

- Commands for complex, repetitive tasks.

- My favorite command is `/build-from-spec`. It reads a file or text input, then runs the cycle of product development: read the spec → plan the development todos → create a new branch → implement → write tests to 100 percent coverage → fix issues → confirm with Playwright screenshots → fix issues → lint → fix issues → update docs → update AI instructions → update the issue with details → commit → push to branch → create a PR tied to the issue.
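
The `/build-from-spec` cycle above could be captured as a project-level Claude Code command. Here is a minimal sketch, assuming custom commands live as Markdown files under `.claude/commands/` and receive their input through the `$ARGUMENTS` placeholder. The step wording and the helper names `render_command` and `write_command` are illustrative, not the actual prompt behind this command.

```python
from pathlib import Path

# Steps taken from the /build-from-spec description above. The wording of
# each instruction is an illustrative guess, not the author's actual prompt.
STEPS = [
    "Read the spec",
    "Plan the development todos",
    "Create a new branch",
    "Implement",
    "Write tests to 100 percent coverage, then fix issues",
    "Confirm with Playwright screenshots, then fix issues",
    "Lint, then fix issues",
    "Update docs",
    "Update AI instructions",
    "Update the issue with details",
    "Commit",
    "Push to branch",
    "Create a PR tied to the issue",
]


def render_command(steps: list[str]) -> str:
    """Render the steps as a numbered Markdown checklist prompt."""
    lines = [
        "Build the feature described in: $ARGUMENTS",
        "",
        "Work through every step in order. Do not skip a step.",
        "",
    ]
    lines += [f"{i}. {step}" for i, step in enumerate(steps, start=1)]
    return "\n".join(lines) + "\n"


def write_command(project_root: Path, name: str = "build-from-spec") -> Path:
    """Write the prompt to .claude/commands/<name>.md under project_root."""
    target = project_root / ".claude" / "commands" / f"{name}.md"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(render_command(STEPS), encoding="utf-8")
    return target
```

Keeping the steps in one ordered list makes it easy to add or reorder a gate without rewriting the whole prompt.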

Claude and review flow

You’ll reach a point where you’re mostly approving work that’s already been reviewed. Enable Claude reviews and comment @claude on PRs to process them asynchronously. By the time you look at the PR, it’s either ready to merge or you tell @claude to fix the issues via GitHub Actions with a simple comment on the PR. With a good testing and release pipeline, we’ll get to automatic releases - at least to DEV first, then prod.

Tests and gates

- High code coverage is non-negotiable. Aim for 100 percent. Yes, I said it.

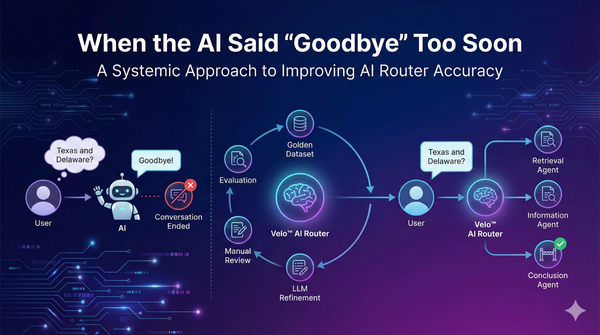

- With high coverage, tests become a deterministic gate for agents. AI will get things wrong; the more deterministic gates you have, the more it retries until it gets it right.

- Pre-commit and pre-push hooks must run tests, lint, and build. Agents can hallucinate or give up; hard gates keep them honest. These hooks also encourage enabling AI to commit and push, which is critical.
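
The hard gates described above can be sketched as a small script that a pre-commit or pre-push hook calls. This is a minimal sketch: the `npm` commands in `GATES` are placeholders for whatever your stack uses, and `run_gates` is a hypothetical helper name. The first failing gate stops the run and returns a non-zero code, which is what makes git reject the commit or push.

```python
import subprocess
import sys
from typing import Callable, Sequence

# Hypothetical gate commands; substitute whatever your project actually uses.
GATES: list[tuple[str, list[str]]] = [
    ("test", ["npm", "test"]),
    ("lint", ["npm", "run", "lint"]),
    ("build", ["npm", "run", "build"]),
]


def run_command(cmd: Sequence[str]) -> int:
    """Run one command and return its exit code."""
    return subprocess.run(list(cmd)).returncode


def run_gates(
    gates: Sequence[tuple[str, Sequence[str]]],
    run: Callable[[Sequence[str]], int] = run_command,
) -> int:
    """Run gates in order; return 0 if all pass, else the first failing code."""
    for name, cmd in gates:
        code = run(cmd)
        if code != 0:
            print(f"gate '{name}' failed with exit code {code}", file=sys.stderr)
            return code
    return 0
```

A hook script would end with `sys.exit(run_gates(GATES))`. The `run` parameter exists so the gate logic can be tested without invoking real tools.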

Branches, PRs, and worktrees

- Work only through branches and PRs. Let the agent go wild; worst case is a wacky PR.

- Worktrees help isolate contexts and speed up parallel efforts.
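
One way to set up a worktree per agent is to generate the `git worktree add` commands from a list of branch names. In this sketch the sibling-directory naming (`<repo>-<branch>`) and the helper name `worktree_command` are arbitrary choices for illustration.

```python
from pathlib import Path


def worktree_command(repo: Path, branch: str) -> list[str]:
    """Build a `git worktree add` command that creates `branch` next to `repo`."""
    # Slashes in branch names would create nested directories, so flatten them.
    slug = branch.replace("/", "-")
    path = repo.parent / f"{repo.name}-{slug}"
    return ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(path)]


def plan_worktrees(repo: Path, branches: list[str]) -> list[list[str]]:
    """Return one command per branch, ready to pass to subprocess.run."""
    return [worktree_command(repo, branch) for branch in branches]
```

Each resulting directory is an independent checkout on its own branch, so one agent's half-finished changes never show up in another agent's context.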

Devcontainers and YOLO mode

- Run Claude Code inside a devcontainer. Use `--dangerously-skip-permissions`.

- Devcontainers encapsulate your MCP setup and other complexity embedded in the workflow.

- Devcontainers isolate your agent's network access.
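
Because `--dangerously-skip-permissions` is only reasonable inside an isolated environment, a launcher can refuse to add the flag anywhere else. The sketch below assumes two things: that the presence of `/.dockerenv` is a good-enough signal for "running in a Docker-based container" (a common heuristic, not a guarantee), and that `claude -p` is used to run a single prompt non-interactively. The helper names are hypothetical.

```python
from pathlib import Path


def in_container(marker: Path = Path("/.dockerenv")) -> bool:
    """Heuristic check: Docker creates this marker file inside containers."""
    return marker.exists()


def claude_command(prompt: str, contained: bool) -> list[str]:
    """Build the Claude Code invocation; refuse YOLO mode outside a container."""
    if not contained:
        raise RuntimeError("refusing to skip permissions outside a container")
    return ["claude", "--dangerously-skip-permissions", "-p", prompt]
```

A wrapper script would call `claude_command(prompt, in_container())` and hand the result to `subprocess.run`.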

Core Tools / MCPs

- Issue tracking plus Playwright are essential.

- If you don’t use Atlassian (`mcp-atlassian`), use GitHub Issues. Agents are excellent with the `gh` CLI and it’s one less MCP.

- If you don’t use GitHub Issues, keep Markdown files in a `/docs` folder to store historical feature development.

- Configure Playwright to generate low-resolution screenshots so they don’t eat context.

- Integrate Playwright into the workflow so the agent verifies its own work visually.

- The Amplifier project mentioned in the post above is an interesting look into the kind of workflows "pure AI" teams are spending their time on. While I am not using it personally, it is a fascinating view into what teams committed to automation are doing.

Amplifier is a coordinated and accelerated development system that turns your expertise into reusable AI tools without requiring code. Describe the step-by-step thinking process for handling a task—a "metacognitive recipe"—and Amplifier builds a tool that executes it reliably. As you create more tools, they combine and build on each other, transforming individual solutions into a compounding automation system.
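
Returning to the Playwright advice earlier in this section: if you drive Playwright from Python directly rather than through an MCP server (an assumption; the setup described here may differ), low-resolution screenshots come down to a small viewport plus a compressed JPEG. The default sizes and quality below are arbitrary starting points, and the helper names are hypothetical.

```python
def screenshot_options(path: str, quality: int = 40) -> dict:
    """Options for a small JPEG screenshot of the visible viewport only."""
    if not 0 <= quality <= 100:
        raise ValueError("quality must be between 0 and 100")
    return {"path": path, "type": "jpeg", "quality": quality, "full_page": False}


def capture(url: str, path: str, width: int = 800, height: int = 600) -> None:
    """Open url in headless Chromium and save a low-resolution screenshot."""
    # Imported here so screenshot_options works without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch()
        page = browser.new_page(viewport={"width": width, "height": height})
        page.goto(url)
        page.screenshot(**screenshot_options(path))
        browser.close()
```

A smaller viewport and lower JPEG quality shrink the image the agent has to read back, at the cost of fine visual detail.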

Patterns and principles

- DRY refactor always. I ask the refactor subagent to do a DRY analysis after every major change, or after a few minor changes. Keep code small and reduce reuse traps, or agents will bloat the code.

- Think one level higher, then go up a level.

- Got it to write tests → get it to write code.

- Got it to write code → get it to write stories.

- Got it to write stories → get it to build designs.

- Got it to build designs → get it to run a design sprint and develop an epic.

- Got an epic → use subagents and worktrees to parallelize development.

- Build one thing per context, and parallelize contexts.

The bigger picture

People say AI will replace engineers. That’s the wrong frame. Every role in the product lifecycle—engineering, design, product management—is being reshaped.

If you know how to build software and apply the rigor required for long-lived systems, you’ll thrive. If you don’t, you’ll struggle. AI doesn’t care about titles; it amplifies structure and discipline.

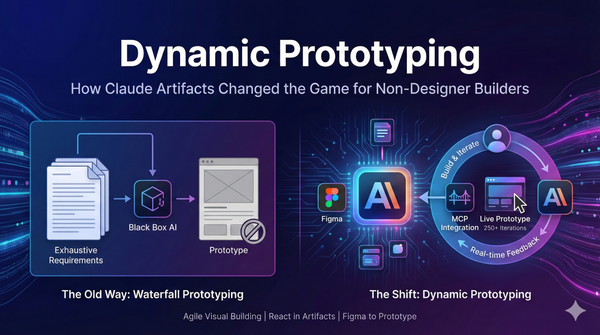

For me, most agent development now starts from feature-level Markdown specs—my own designs, turned into working products with AI.

So let’s stop worrying about losing one job and start six companies at the same time.

** Special thanks to Bryon Jacob, whose forward-thinking leadership in this space continues to challenge me to YOLO all the things. Thanks Bryon!