A Smarter Defence Strategy Against Bot Attacks with Multi-Key Rate Limiting

The Rise of Malicious Bot Traffic

The internet is now dominated by automated activity. According to the 2025 Imperva Bad Bot Report, bots generate over 51% of all web traffic, and malicious bots alone account for nearly 37% of all traffic. In other words, more than two-thirds of automated requests online (roughly 37 out of every 51) come from “bad bots”: systems designed to scrape data, overload infrastructure, or exploit vulnerabilities.

At ZenBusiness, we see this reality every day. Automated attacks can quickly strain or even take down critical services if not properly mitigated.

Defence in Depth and Its Challenges

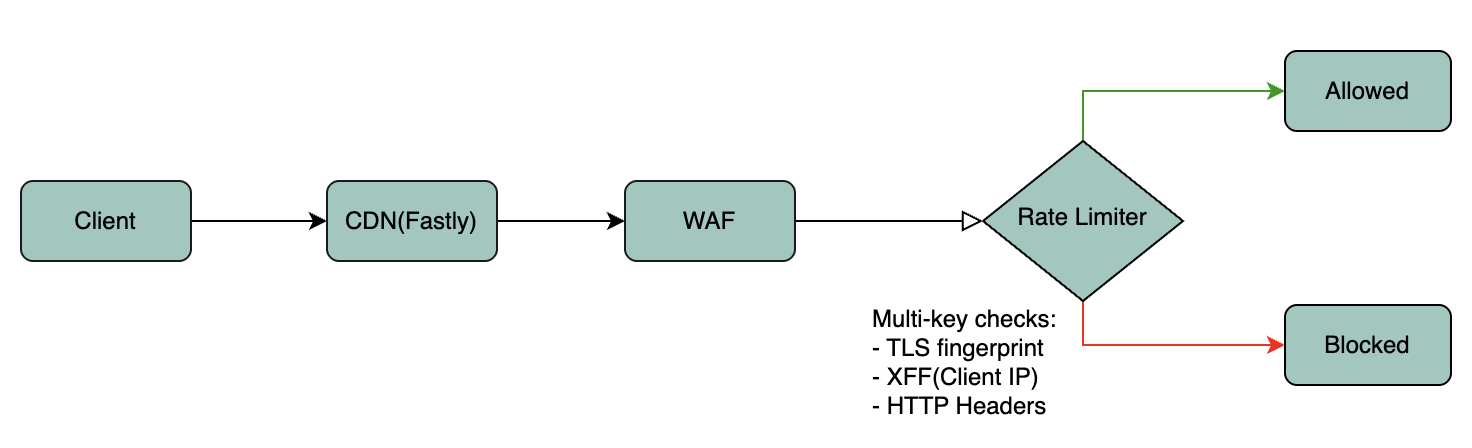

Like many enterprise organizations, we follow the defence-in-depth principle: a multi-layered, segmented architecture built from VPCs, private subnets, managed clusters, network firewalls, and other forms of abstraction.

Despite this combination of controls, these systems are still challenged by sophisticated bots. Unlike the simple crawlers of the past, these bots adapt, evolve, and mimic typical human behavior.

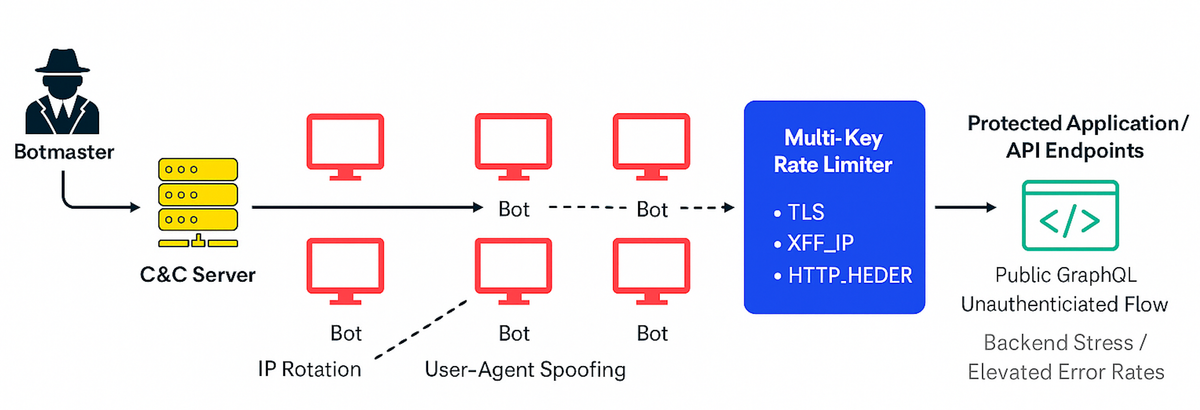

Recently, we have seen bots evade defences using tactics such as:

- Distributed traffic and IP rotation: continuously cycling through IPs to evade per-IP rate limits and reputation filters.

- User-Agent spoofing: crafting headers to impersonate legitimate browsers or mobile apps.

- Hiding malicious code: scrambling and encrypting code so that web application firewall (WAF) and Security Information and Event Management (SIEM) software struggle to analyze it and detect malicious signatures.

These techniques often target high-risk endpoints such as public GraphQL APIs or unauthenticated flows, leading to backend overload, higher error rates, and a degraded user experience.

The Limits of Traditional Rate Limiting

Rate limiting is a cornerstone of WAF defence, controlling how often requests can hit specific endpoints. We tune our limits dynamically, tightening restrictions on higher-risk APIs.

But traditional rate limiting has limitations when it relies on a single identifier, such as:

- IP address (XFF_IP)

- User-Agent header

This approach falls short because:

- IP-based limits fail when bots use proxy networks or botnets with rotating IPs.

- User-Agent filtering becomes unreliable once attackers mimic real browsers.

Worse, tightening rate limits too aggressively can accidentally throttle legitimate customers, creating friction and potential business impact.
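To make the weakness concrete, here is a minimal sketch of a single-key limiter keyed only on the client IP. The class name `SlidingWindowLimiter`, the `simulate` helper, the limit of 5 requests per 60 seconds, and the documentation-range IP addresses are all illustrative assumptions, not our production configuration.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per key within `window_s` seconds."""

    def __init__(self, limit: int, window_s: float) -> None:
        self.limit = limit
        self.window_s = window_s
        self._hits = defaultdict(deque)  # key -> timestamps of allowed requests

    def allow(self, key: str, now: float) -> bool:
        hits = self._hits[key]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_s:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True


def simulate(ips, limit: int = 5, window_s: float = 60.0) -> int:
    """Send one request per entry in `ips` (10 ms apart); return how many pass."""
    limiter = SlidingWindowLimiter(limit, window_s)
    return sum(limiter.allow(ip, now=i * 0.01) for i, ip in enumerate(ips))


# Hypothetical traffic: 100 requests from one IP vs. 100 requests from 100 IPs.
single_ip = ["203.0.113.7"] * 100
rotating = [f"198.51.100.{i}" for i in range(100)]

print(simulate(single_ip))  # 5   -> the limiter works against a single source
print(simulate(rotating))   # 100 -> every request lands in a fresh bucket
```

The same 100 requests pass untouched once each one arrives from a different address, which is the gap that rotating proxies exploit.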

A Smarter Solution: Multi-Key Rate Limiting

To counter these evolving threats, we recommend a multi-key rate limiting strategy: a smarter, more contextual way to identify traffic patterns.

Instead of depending on a single attribute, combine multiple identifiers (“keys”) to build a richer picture of request behavior.

Enforcement Key | Why It Works |

|---|---|

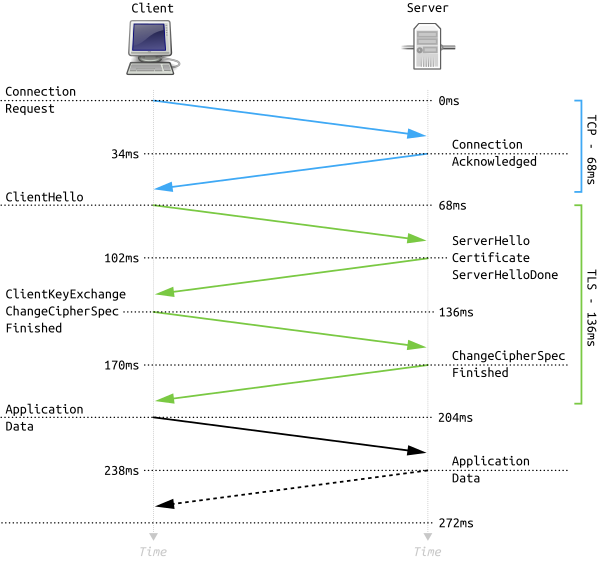

TLS_JA3 Fingerprint | Tracks unique TLS handshake patterns, difficult for bots to spoof even when rotating IPs. |

XFF_IP | Captures the original client IP, even behind proxies or CDNs. |

HTTP Headers (e.g., User-Agent) | Adds behavioral context for advanced fingerprinting. |
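For readers unfamiliar with JA3, the sketch below shows roughly how such a fingerprint is derived: five fields from the TLS ClientHello are written as decimal values, joined into one string, and hashed with MD5. The function names and the sample field values are illustrative assumptions. In practice the WAF or load balancer computes the fingerprint for you.

```python
import hashlib

# GREASE values (RFC 8701) are random placeholders that clients insert into
# the handshake; JA3 skips them so the fingerprint stays stable.
GREASE = {0x0A0A + 0x1010 * i for i in range(16)}


def ja3_string(version, ciphers, extensions, curves, point_formats) -> str:
    """Build the JA3 string: fields joined by ',', values within a field by '-'."""
    fields = [ciphers, extensions, curves, point_formats]
    parts = [str(version)] + [
        "-".join(str(v) for v in values if v not in GREASE) for values in fields
    ]
    return ",".join(parts)


def ja3_hash(ja3: str) -> str:
    """The fingerprint is the MD5 hex digest of the JA3 string."""
    return hashlib.md5(ja3.encode("ascii")).hexdigest()


# Hypothetical ClientHello values, including one GREASE cipher that is dropped.
sample = ja3_string(769, [47, 53, 0x0A0A], [0, 10, 11], [23, 24, 25], [0])
print(sample)            # 769,47-53,0-10-11,23-24-25,0
print(ja3_hash(sample))  # 32-character hex fingerprint
```

Nothing in the input depends on the client IP or on HTTP headers, which is why the fingerprint tends to survive IP rotation and User-Agent spoofing.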

By correlating these keys, we create unique traffic buckets. Even if a bot changes its IP or fakes headers, its TLS fingerprint usually remains consistent, allowing the WAF to flag it accurately as malicious.
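One way to picture this is a limiter that keeps a separate counter per rule, where each rule derives its bucket from one key or from a combination of keys. The sketch below is an illustration under stated assumptions, not a description of how any particular WAF implements it: the `Request`, `Rule`, and `MultiKeyLimiter` names, the rule set, the limits, and the fingerprint string are all hypothetical.

```python
from collections import Counter, defaultdict, deque
from dataclasses import dataclass
from typing import Callable, Hashable, Optional


@dataclass(frozen=True)
class Request:
    xff_ip: str      # client IP taken from X-Forwarded-For
    ja3: str         # TLS JA3 fingerprint
    user_agent: str  # User-Agent header value


@dataclass(frozen=True)
class Rule:
    name: str
    key: Callable[[Request], Hashable]  # which attributes form the bucket
    limit: int                          # max requests per window


class MultiKeyLimiter:
    def __init__(self, rules, window_s: float) -> None:
        self.rules = rules
        self.window_s = window_s
        self._hits = defaultdict(deque)  # (rule name, bucket key) -> timestamps

    def check(self, req: Request, now: float) -> Optional[str]:
        """Return the name of the first rule that blocks `req`, else None."""
        buckets = []
        for rule in self.rules:
            hits = self._hits[(rule.name, rule.key(req))]
            while hits and now - hits[0] >= self.window_s:
                hits.popleft()
            if len(hits) >= rule.limit:
                return rule.name
            buckets.append(hits)
        for hits in buckets:  # count the request only if every rule allowed it
            hits.append(now)
        return None


# Illustrative rules: single keys plus one composite (JA3 + User-Agent) key.
RULES = [
    Rule("per_ip", lambda r: r.xff_ip, limit=5),
    Rule("per_ja3_and_ua", lambda r: (r.ja3, r.user_agent), limit=10),
    Rule("per_ja3", lambda r: r.ja3, limit=20),
]


def replay_rotating_bot(rotate_ua: bool, n: int = 100) -> dict:
    """Replay n requests from a bot that uses a new IP for every request."""
    limiter = MultiKeyLimiter(RULES, window_s=60.0)
    outcome = Counter()
    for i in range(n):
        ua = f"FakeBrowser/{i}" if rotate_ua else "Mozilla/5.0"
        req = Request(f"198.51.100.{i}", "hypothetical-ja3-hash", ua)
        outcome[limiter.check(req, now=i * 0.01) or "allowed"] += 1
    return dict(outcome)


print(replay_rotating_bot(rotate_ua=False))  # {'allowed': 10, 'per_ja3_and_ua': 90}
print(replay_rotating_bot(rotate_ua=True))   # {'allowed': 20, 'per_ja3': 80}
```

In both runs the per-IP rule never fires, yet the bot is throttled because its TLS fingerprint stays the same. Note that legitimate clients running the same browser build can share a JA3 fingerprint, so a rule keyed on JA3 alone generally needs a looser limit than a composite key.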

Looking Ahead

Bots will continue to evolve, and so must our defences. At ZenBusiness, we’re committed to building adaptive and data-driven security controls that protect our customers and infrastructure without compromising performance.

Multi-key rate limiting represents a crucial step forward in that mission, turning static defences into intelligent, behavior-aware systems that stay one step ahead of attackers.